DeepSeek R1 is an advanced AI language model that can be run locally for enhanced privacy, speed, and customization. By using Ollama, a lightweight AI model manager, you can easily install and run DeepSeek R1 on your system.

This guide walks you through:

- Installing Ollama on macOS, Windows, and Linux

- Downloading and running DeepSeek R1 locally

- Interacting with the model using simple commands

By the end of this guide, you’ll be able to set up and use DeepSeek R1 efficiently on your local machine.

What is DeepSeek R1?

DeepSeek R1 is an open-source AI model designed for natural language processing (NLP), chatbots, and text generation. It provides an alternative to cloud-based AI models like ChatGPT and Gemini, allowing users to process data locally.


Why Run DeepSeek R1 Locally?

| Benefit | Description |
|---|---|
| Privacy | Keeps data secure without sending queries to external servers. |
| Speed | Avoids network round trips to cloud servers; actual speed depends on your hardware. |
| Customization | Can be fine-tuned for specific tasks or workflows. |
| Offline Access | Works without an internet connection once the model is downloaded. |

To run DeepSeek R1 locally, you first need to install Ollama, which acts as a lightweight AI model runtime.

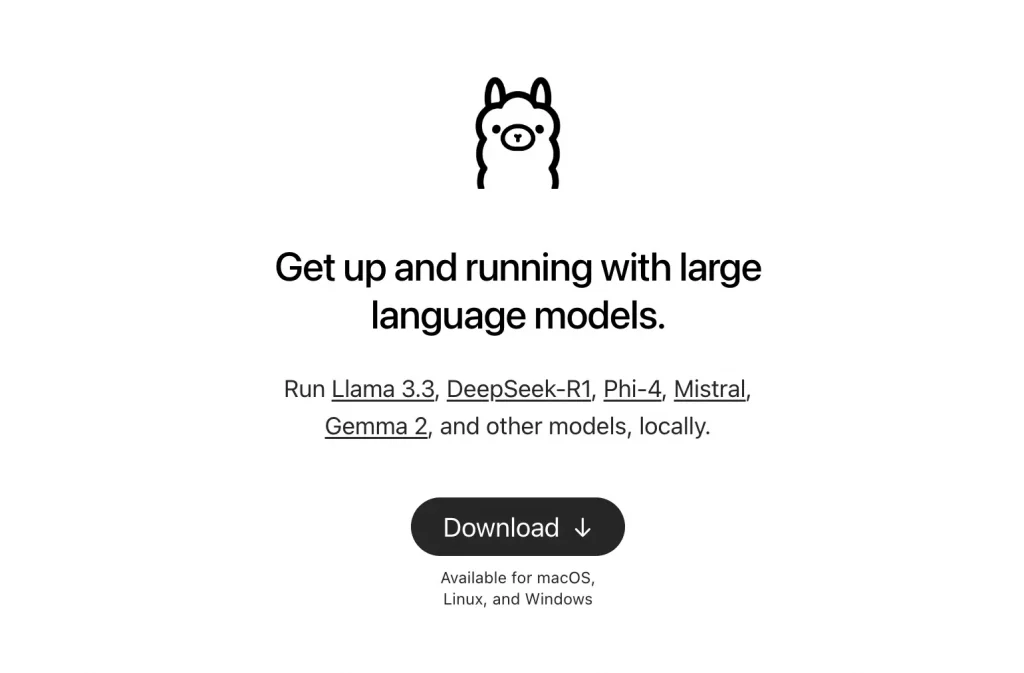

What is Ollama?

Ollama is an AI model management tool that simplifies running large language models locally. It provides:

- Easy installation and setup: No complex configuration is required.

- Efficient model execution: Optimized for running AI models on consumer hardware.

- Offline capabilities: Once downloaded, models can run without an internet connection.

Ollama acts as a lightweight AI model runtime, allowing users to pull, serve, and interact with AI models like DeepSeek R1 on their local machines.

Installing Ollama:

Follow these steps to install Ollama on your system:

For macOS: Open Terminal and run:
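A minimal sketch of the macOS step, assuming Ollama is installed through Homebrew's ollama formula (the plain command would be brew install ollama). The function name install_ollama_macos is a hypothetical helper for illustration, not part of Ollama or Homebrew:

```shell
# Hypothetical helper: install Ollama with Homebrew if brew is available.
# Assumption: the Homebrew formula is named "ollama".
install_ollama_macos() {
  if command -v brew >/dev/null 2>&1; then
    # Plain form of the same step: brew install ollama
    brew install ollama
  else
    echo "Homebrew not found; see brew.sh for setup instructions" >&2
    return 1
  fi
}
```

Calling install_ollama_macos runs the install, or prints a pointer to brew.sh and returns a non-zero status when Homebrew is missing.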

If the Homebrew package manager isn’t installed, go to brew.sh and follow the setup instructions.

For Windows & Linux:

- Download Ollama from the official Ollama website.

- Follow the installation guide for your operating system.

Alternatively, Linux users can install it via Terminal:

curl -fsSL https://ollama.com/install.sh | sh

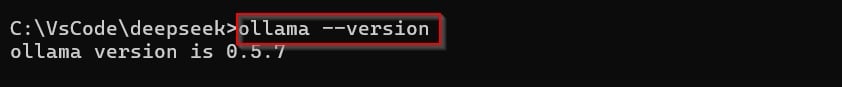

Now run this command to check whether Ollama is installed:
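The check itself is ollama --version, the same command the FAQ in this guide relies on. As a hedged sketch, it can be wrapped so that a missing binary produces a readable message instead of a bare shell error; check_ollama is a hypothetical name chosen for this example:

```shell
# Hypothetical helper: report the installed Ollama version, or explain what is missing.
check_ollama() {
  if command -v ollama >/dev/null 2>&1; then
    # Plain form of the same step: ollama --version
    ollama --version
  else
    echo "ollama not found on PATH; restart the terminal or reinstall Ollama" >&2
    return 1
  fi
}
```

Running check_ollama prints the version string when the install succeeded and returns a non-zero status otherwise.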

Once Ollama is successfully installed, you can proceed with setting up DeepSeek R1.

Steps to Run DeepSeek R1 Locally on Ollama

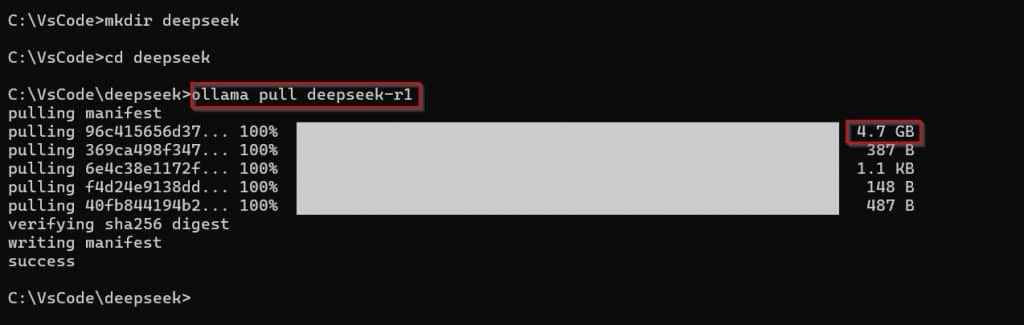

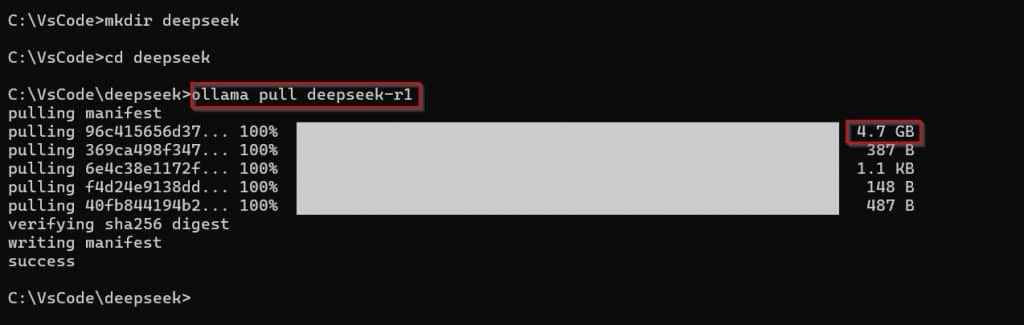

Step 1: Download the DeepSeek R1 Model

To begin using DeepSeek R1, download the model by running:
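The pull command takes a model name with an optional size tag, so the default download is ollama pull deepseek-r1. A minimal sketch that covers both the default and a tagged pull; pull_deepseek is a hypothetical wrapper, and only the 1.5b tag is taken from this guide:

```shell
# Hypothetical helper: pull DeepSeek R1, optionally with a size tag such as 1.5b.
pull_deepseek() {
  # With no argument this runs: ollama pull deepseek-r1
  # With an argument it runs:   ollama pull deepseek-r1:<tag>
  ollama pull "deepseek-r1${1:+:$1}"
}
```

For example, pull_deepseek fetches the default model, while pull_deepseek 1.5b fetches the smaller variant.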

For a smaller version, specify the model size:

ollama pull deepseek-r1:1.5b

After downloading, you’re ready to start using DeepSeek R1.

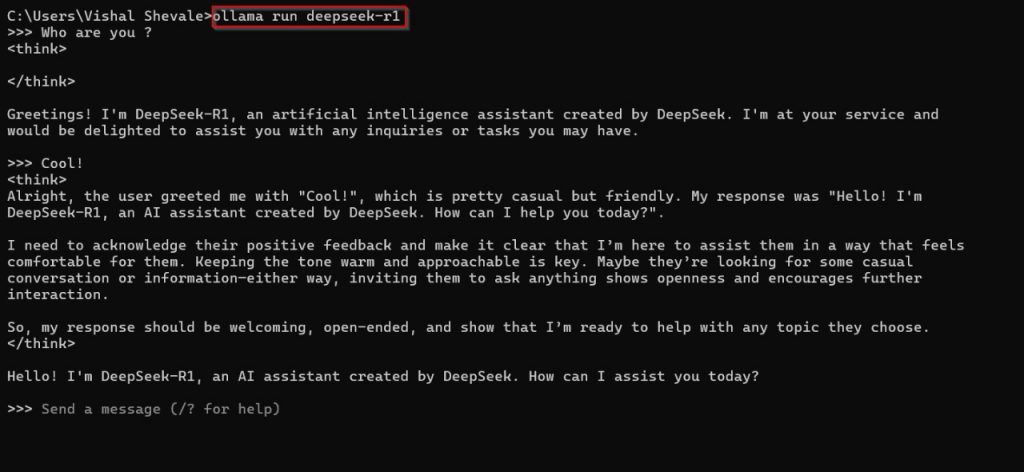

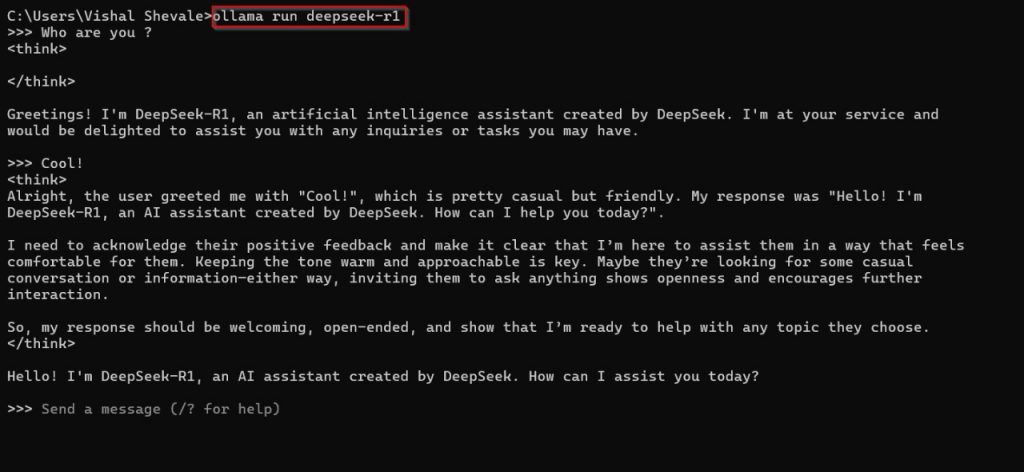

Step 2: Start the Model

The Ollama server usually starts automatically. If it does not, run:
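The manual start command is ollama serve. As a sketch, the check-then-start logic might look like the following, assuming the server listens on Ollama's default address http://localhost:11434 and that curl is available; start_ollama_server is a hypothetical name:

```shell
# Hypothetical helper: start the Ollama server only if it is not already answering.
# Assumption: the server uses the default address http://localhost:11434.
start_ollama_server() {
  if curl -fsS http://localhost:11434/ >/dev/null 2>&1; then
    echo "Ollama server already running"
  else
    # Runs in the foreground and occupies this terminal until stopped.
    ollama serve
  fi
}
```

Because ollama serve stays in the foreground, open a second terminal for the next step if you start the server this way.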

Step 3: Interact with DeepSeek R1

With the model running, you can now interact with it in the terminal. Try entering a query:
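Running ollama run deepseek-r1 opens an interactive prompt; passing the question as an extra argument sends a single query and exits. A minimal sketch of the one-shot form, where ask_deepseek and the DEEPSEEK_MODEL variable are hypothetical names introduced for this example:

```shell
# Hypothetical helper: send one prompt to DeepSeek R1 and print the reply.
# DEEPSEEK_MODEL is an assumed override, e.g. deepseek-r1:1.5b if you pulled the smaller model.
ask_deepseek() {
  # Interactive form of the same step: ollama run deepseek-r1
  ollama run "${DEEPSEEK_MODEL:-deepseek-r1}" "$1"
}
```

For example, ask_deepseek "Explain recursion in one paragraph" sends that prompt to the default model.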

The model will then return its response.

Troubleshooting Common Issues

1. Ollama Not Found

Issue: The ollama command is not recognized.

Solution: Restart your terminal and verify the installation by running ollama --version.

2. Model Download Fails

Issue: Slow download or errors when pulling DeepSeek R1.

Solution:

- Check your internet connection.

- Use a VPN if your region has restrictions.

- Retry the command after some time.

Conclusion

Running DeepSeek R1 locally with Ollama gives you privacy, faster processing, and offline accessibility. By following this guide, you have successfully:

✅ Installed Ollama on your system.

✅ Downloaded and set up DeepSeek R1 locally.

✅ Run and interacted with the model via Terminal commands.

For further customization, explore Ollama’s documentation and fine-tune DeepSeek R1 for specific applications.


Frequently Asked Questions

1. How much RAM and storage are required to run DeepSeek-R1 locally?

To run the DeepSeek-R1 model locally, a minimum of 16GB of RAM and approximately 20GB of free storage space on an SSD are required. For larger DeepSeek models, additional RAM, increased storage, and potentially a dedicated GPU may be necessary.

2. How do I fix the “command not found” error for DeepSeek R1?

Ensure Ollama is installed correctly by running ollama --version. Restart your terminal and verify DeepSeek R1 exists using ollama list. Reinstall Ollama if the issue persists.

3. Can I fine-tune DeepSeek R1 locally?

Yes, DeepSeek R1 can be fine-tuned on local datasets, but it requires high-end GPU resources. Advanced knowledge of model training is recommended for customization.

4. How do I uninstall DeepSeek R1 from my system?

Run ollama rm deepseek-r1 to remove the model. To uninstall Ollama completely, follow the official Ollama removal guide for your OS.

5. Does DeepSeek R1 support multiple languages?

DeepSeek R1 primarily supports English but can generate responses in other languages with varying accuracy. Performance depends on the training data.

6. Can I integrate DeepSeek R1 into my applications?

Yes, DeepSeek R1 can be integrated into applications using the Ollama API. Check the official documentation for API commands and implementation steps.
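As a rough illustration of such an integration, the sketch below posts a prompt to Ollama's /api/generate endpoint with curl. It assumes the server is running at the default address http://localhost:11434; build_generate_body and generate are hypothetical helper names, and no JSON escaping is performed, so the prompt must not contain double quotes or backslashes:

```shell
# Hypothetical helper: build the JSON request body for /api/generate.
# Assumption: model name and prompt contain no double quotes or backslashes.
build_generate_body() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Hypothetical helper: send the request to the local Ollama server.
# Assumption: the server listens on the default address http://localhost:11434.
generate() {
  curl -s http://localhost:11434/api/generate -d "$(build_generate_body "$1" "$2")"
}
```

For example, generate deepseek-r1 "Summarize what Ollama does" returns a single JSON object; with stream set to false, the generated text is in its response field.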