Compared with robotic systems, humans are excellent navigators of the physical world. Physical processes aside, this largely comes down to innate cognitive abilities still lacking in most robotics:

- The ability to localize landmarks at varying ontological levels, such as a “book” being “on a shelf” or “in the living room”

- The ability to quickly determine whether there is a navigable path between two points based on the layout of the environment

Early robot navigation systems relied on basic line-following techniques. These eventually evolved into navigation based on visual perception, provided by cameras or LiDAR, to construct geometric maps. Later, Simultaneous Localization and Mapping (SLAM) systems were integrated to provide the ability to plan routes through environments.

Multimodal Robot Navigation – Where Are We Now?

More recent attempts to endow robots with the same capabilities have centered on building geometric maps for path planning and parsing goals from natural language commands. However, this approach struggles to generalize to new or previously unseen instructions, not to mention environments that change dynamically or are ambiguous in some way.

Learning-based methods, by contrast, directly optimize navigation policies end to end from language commands. While this approach is not inherently bad, it requires vast amounts of data to train the models.

Current Artificial Intelligence (AI) and deep learning models are adept at matching object images to natural language descriptions by leveraging training on internet-scale data. However, this capability does not translate well to mapping the environments that contain those objects.

New research aims to integrate multimodal inputs to enhance robot navigation in complex environments. Instead of basing route planning on one-dimensional visual input, these systems combine visual, audio, and language cues. This creates a richer context and improves situational awareness.

Introducing AVLMaps and VLMaps – A New Paradigm for Robot Navigation?

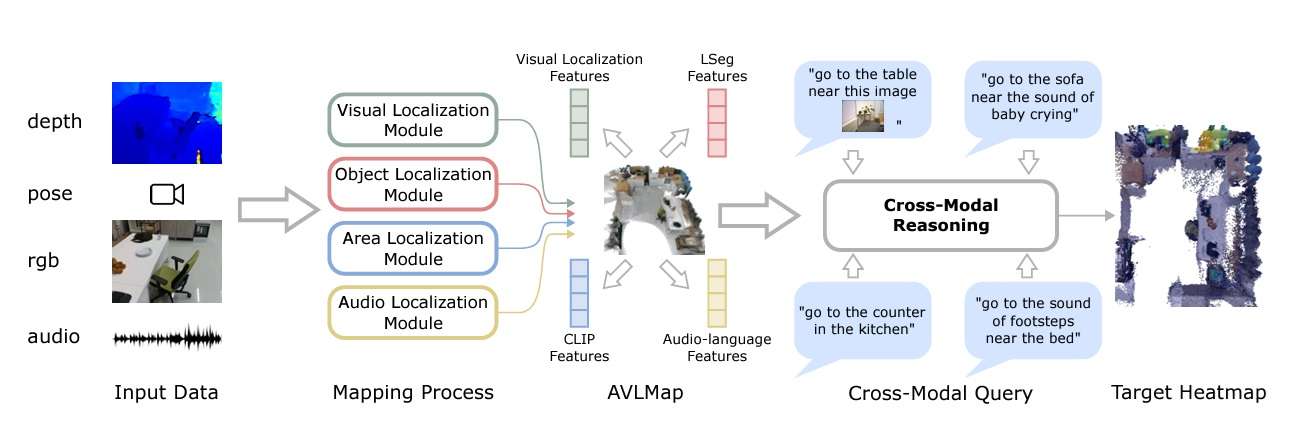

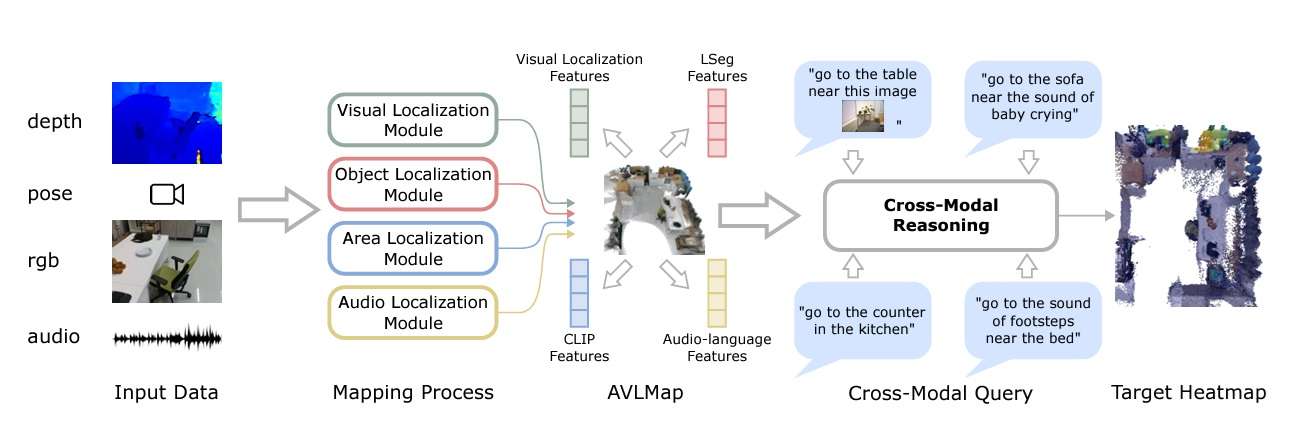

One potentially groundbreaking area of study in this field relates to so-called VLMaps (Visual Language Maps) and AVLMaps (Audio Visual Language Maps). The recent papers “Visual Language Maps for Robot Navigation” and “Audio Visual Language Maps for Robot Navigation” by Chenguang Huang and colleagues explore the prospect of using these models for robot navigation in great detail.

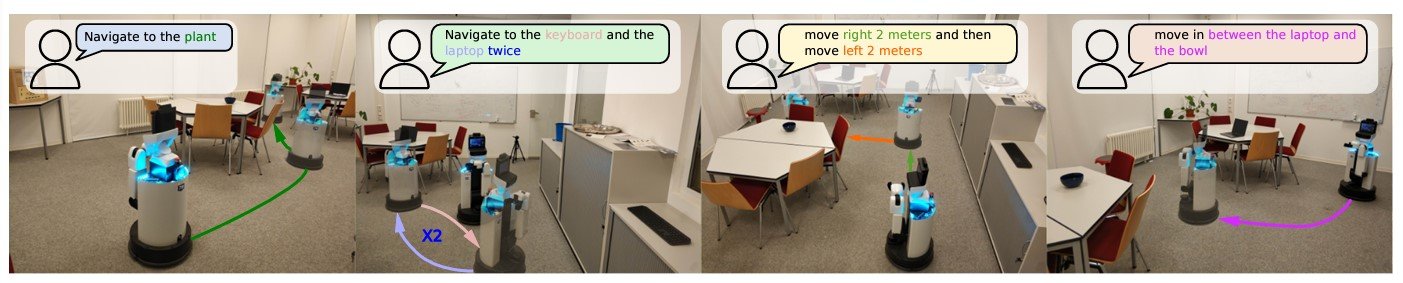

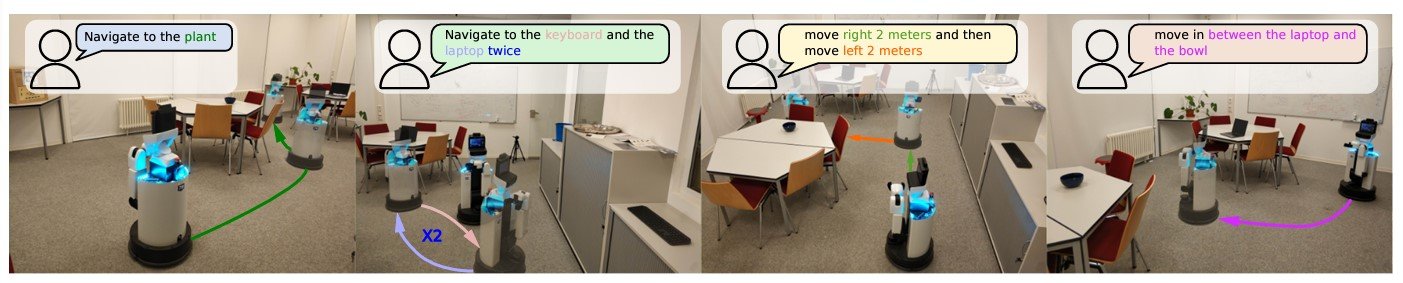

VLMaps directly fuses visual-language features from pre-trained models with 3D reconstructions of the physical environment. This enables precise spatial localization of navigation goals anchored in natural language commands. It can also localize landmarks and spatial references relative to those landmarks.

The main advantage is that this allows for zero-shot spatial goal navigation without additional data collection or fine-tuning.

This approach allows for more accurate execution of complex navigational tasks and the sharing of these maps between different robotic systems.

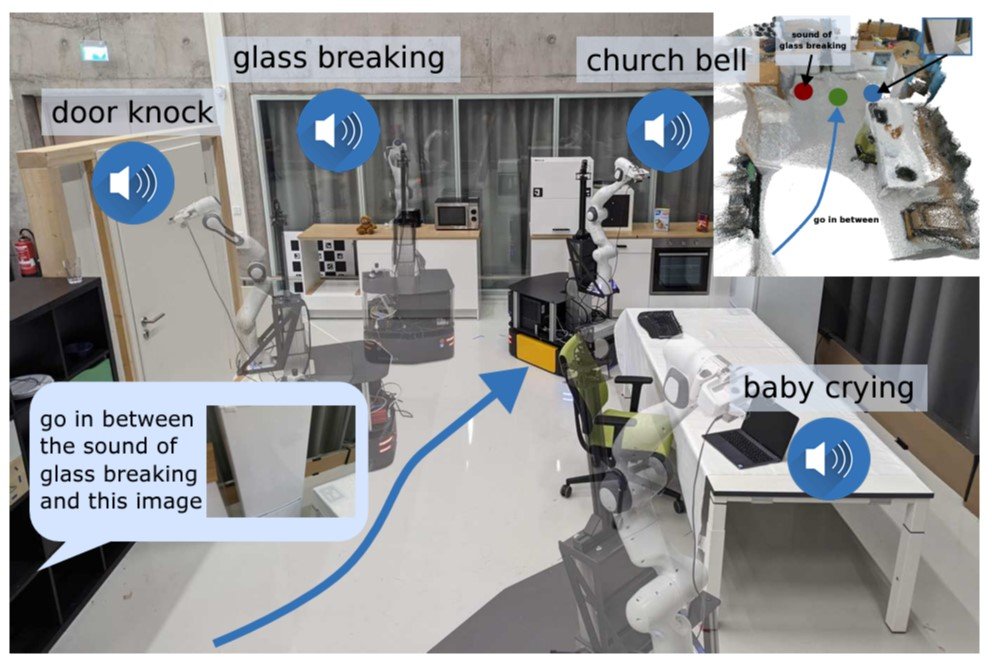

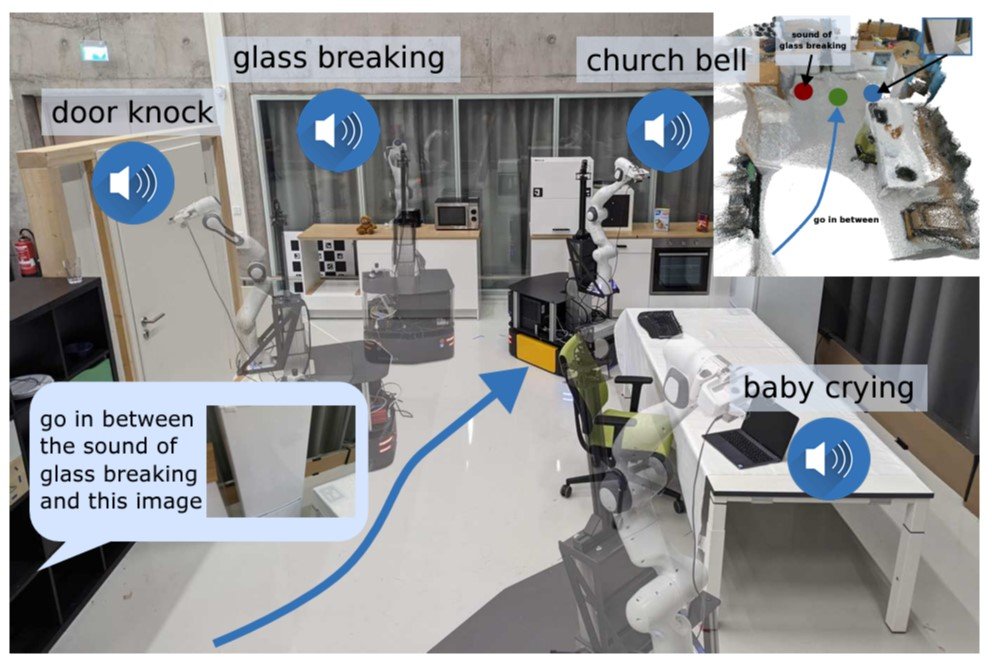

AVLMaps are based on the same approach but also incorporate audio cues to construct a 3D voxel grid using pre-trained multimodal models. This makes zero-shot multimodal goal navigation possible by indexing landmarks using textual, image, and audio inputs. For example, this would allow a robot to carry out a navigation goal such as “go to the table where the beeping sound is coming from.”

Audio input can enrich the system’s perception of the world and help disambiguate goals in environments with multiple potential targets.

VLMaps: Integrating Visual-Language Features with Spatial Mapping

Related work in AI and computer vision has played a pivotal role in developing VLMaps. For instance, the maturation of SLAM techniques has greatly advanced the ability to translate semantic information into 3D maps. Traditional approaches relied either on densely annotated 3D volumetric maps with 2D semantic segmentation Convolutional Neural Networks (CNNs) or on object-oriented methods to build 3D maps.

While progress has been made in generalizing these models, they are heavily constrained by operating on a predefined set of semantic classes. VLMaps overcomes this limitation by creating open-vocabulary semantic maps that allow natural language indexing.

Improvements in Vision and Language Navigation (VLN) have also led to the ability to learn end-to-end policies that follow route-based instructions on topological graphs of simulated environments. However, until now, their real-world applicability has been limited by a reliance on topological graphs and a lack of low-level planning capabilities. Another downside is the need for huge data sets for training.

For VLMaps, the researchers were influenced by pre-trained language and vision models, such as LM-Nav and CoW (CLIP on Wheels). The latter performs zero-shot language-based object navigation by leveraging CLIP-based saliency maps. While these models can navigate to objects, they struggle with spatial queries, such as “to the left of the chair” and “in between the TV and the sofa.”

VLMaps extends these capabilities by supporting open-vocabulary obstacle maps and complex spatial language indexing. This allows navigation systems to build queryable scene representations for LLM-based robot planning.

Key Components of VLMaps

Several key components in the development of VLMaps allow for building a spatial map representation that localizes landmarks and spatial references based on natural language.

Building a Visual-Language Map

VLMaps uses a video feed from robots combined with standard exploration algorithms to build a visual-language map. The process involves:

- Visual Feature Extraction: Using models like CLIP to extract visual-language features from image observations.

- 3D Reconstruction: Combining these features with 3D spatial data to create a comprehensive map.

- Indexing: Enabling the map to support natural language queries, allowing for indexing and localization of landmarks.

Mathematically, if $V$ represents the visual features and $L$ represents the language features, their fusion can be represented as $M = f(V, L)$, where $M$ is the resulting visual-language map.
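
To make the map-building steps above more concrete, here is a minimal sketch of one way per-pixel features could be combined with spatial data. It assumes each pixel embedding has already been back-projected to world coordinates, and it simply averages all features that fall into the same top-down grid cell. The function name `build_feature_grid`, the `cell_size` parameter, and the toy two-dimensional features are illustrative assumptions, not the authors' implementation.

```python
from collections import defaultdict


def build_feature_grid(points, cell_size=0.5):
    """Average per-point feature vectors into top-down grid cells.

    points: iterable of (x, y, feature), where x and y are world
    coordinates in meters and feature is a list of floats standing in
    for a visual-language embedding (hypothetical toy data).
    """
    sums = {}
    counts = defaultdict(int)
    for x, y, feature in points:
        cell = (int(x // cell_size), int(y // cell_size))
        if cell not in sums:
            sums[cell] = [0.0] * len(feature)
        # Accumulate the feature vector for this cell.
        sums[cell] = [s + f for s, f in zip(sums[cell], feature)]
        counts[cell] += 1
    # Divide each accumulated vector by the number of points in its cell.
    return {cell: [s / counts[cell] for s in total] for cell, total in sums.items()}


points = [
    (0.1, 0.2, [1.0, 0.0]),
    (0.3, 0.4, [0.0, 1.0]),  # lands in the same cell as the first point
    (1.2, 0.1, [0.2, 0.8]),
]
grid = build_feature_grid(points)
```

Averaging is only one possible choice for the fusion function $f$; it keeps the sketch short and makes the result independent of the order in which frames arrive.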

Localizing Open-Vocabulary Landmarks

To localize landmarks in VLMaps using natural language, an input language list is defined with a text representation for each category. Examples include [“chair”, “sofa”, “table”] or [“furniture”, “floor”]. This list is converted into vector embeddings using the pre-trained CLIP text encoder.

The map embeddings are then flattened into matrix form. The pixel-to-category similarity matrix is computed, with each element indicating how similar a pixel is to a category. Applying the argmax operator and reshaping the result gives the final segmentation map, which identifies the most closely related language-based category for each pixel.
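
The flatten, similarity, argmax, and reshape steps described above can be sketched in a few lines. The example below uses plain Python lists to stay dependency-free, and the three-dimensional vectors are hand-made stand-ins for CLIP embeddings; in a real system both the map features and the text embeddings would come from the pre-trained encoders. The names `cosine` and `segment_map` are assumptions introduced for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def segment_map(feature_map, text_embeddings):
    """Label each pixel of an H x W feature map with its best-matching category index."""
    height, width = len(feature_map), len(feature_map[0])
    # Flatten the H x W map into a list of H*W pixel embeddings.
    flat = [pixel for row in feature_map for pixel in row]
    # Pixel-to-category similarity matrix: one row per pixel, one column per category.
    scores = [[cosine(pixel, text) for text in text_embeddings] for pixel in flat]
    # Argmax over categories, then reshape back to H x W.
    labels = [max(range(len(row)), key=row.__getitem__) for row in scores]
    return [labels[r * width:(r + 1) * width] for r in range(height)]


categories = ["chair", "sofa", "table"]
# Toy stand-ins for text-encoder outputs (assumed, not real CLIP vectors).
text_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
feature_map = [
    [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1]],
    [[0.1, 0.1, 0.8], [0.6, 0.3, 0.1]],
]
segmentation = segment_map(feature_map, text_embeddings)
named = [[categories[i] for i in row] for row in segmentation]
```

Because the category list is just text, swapping in a different list such as [“furniture”, “floor”] changes the segmentation without touching the map itself.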

Generating Open-Vocabulary Obstacle Maps

Using a Large Language Model (LLM), VLMaps interprets commands and breaks them into subgoals, allowing for specific directives like “in between the sofa and the TV” or “three meters east of the chair.”

The LLM generates executable Python code for robots, translating high-level instructions into parameterized navigation tasks. For example, commands such as “move to the left side of the counter” or “move between the sink and the oven” are converted into precise navigation actions using predefined functions.
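
As a rough illustration of what such generated code might look like, the sketch below defines a small hypothetical navigation interface and then calls it the way an LLM-written program could. The class `ToyRobot`, its method names, the hard-coded landmark coordinates, and the conventions that “left” means negative x and “east” means positive x are all assumptions made for this example; they are not taken from the papers.

```python
class ToyRobot:
    """Hypothetical navigation interface with landmarks at fixed (x, y) positions in meters."""

    def __init__(self, landmarks):
        self.landmarks = landmarks
        self.goals = []  # navigation goals issued so far, in order

    def move_to(self, x, y):
        self.goals.append((x, y))

    def move_to_left(self, name, offset=1.0):
        x, y = self.landmarks[name]
        self.move_to(x - offset, y)  # "left" is assumed to be -x in the map frame

    def move_in_between(self, name_a, name_b):
        (xa, ya), (xb, yb) = self.landmarks[name_a], self.landmarks[name_b]
        self.move_to((xa + xb) / 2, (ya + yb) / 2)  # midpoint of the two landmarks

    def move_east_of(self, name, meters):
        x, y = self.landmarks[name]
        self.move_to(x + meters, y)  # "east" is assumed to be +x in the map frame


robot = ToyRobot({
    "counter": (4.0, 2.0),
    "sink": (1.0, 5.0),
    "oven": (3.0, 5.0),
    "chair": (0.0, 0.0),
})

# The kind of program an LLM might emit for the commands quoted above.
robot.move_to_left("counter")
robot.move_in_between("sink", "oven")
robot.move_east_of("chair", 3.0)
```

In a full system the landmark positions would be looked up in the visual-language map rather than hard-coded, which is what lets the same generated program work in a new environment.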

AVLMaps: Enhancing Navigation with Audio, Visual, and Language Cues

AVLMaps largely builds on the approach used in developing VLMaps but extends it with multimodal capabilities to process auditory input as well. In AVLMaps, objects can be localized directly from natural language instructions using both visual and audio cues.

For testing, the robot was again provided with an RGB-D video stream and odometry information, but this time with an audio track included.

Module Types

In AVLMaps, the system uses four modules to build a multimodal features database. They are:

- Visual Localization Module: Localizes a query image in the map using a hierarchical scheme, computing both local and global descriptors in the RGB stream.

- Object Localization Module: Uses open-vocabulary segmentation (OpenSeg) to generate pixel-level features from the RGB image, associating them with back-projected depth pixels in the 3D reconstruction. It computes cosine similarity scores between all point features and language features, selecting the top-scoring points in the map for indexing.

- Area Localization Module: The paper proposes a sparse topological CLIP features map to identify coarse visual concepts, like “kitchen area.” Again using cosine similarity scores, the model calculates confidence scores for predicting locations.

- Audio Localization Module: Partitions an audio clip from the stream into segments using silence detection. It then computes audio-lingual features for each segment using AudioCLIP to produce matching scores for predicting locations based on odometry information.
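
The silence-based partitioning used by the audio localization module can be illustrated with a simple amplitude threshold. This is a generic sketch, not the method from the paper: the function `split_on_silence`, the `threshold` and `min_silence` parameters, and the toy sample values are all assumptions. Each returned segment is a half-open index range that a model such as AudioCLIP could then embed.

```python
def split_on_silence(samples, threshold=0.1, min_silence=3):
    """Return (start, end) index pairs of non-silent segments, end exclusive.

    A run of at least `min_silence` samples with |amplitude| < threshold
    closes the current segment. Both parameters are illustrative.
    """
    segments = []
    start = None    # start index of the segment being built, if any
    quiet_run = 0   # consecutive quiet samples seen inside the segment
    for i, sample in enumerate(samples):
        if abs(sample) >= threshold:
            if start is None:
                start = i
            quiet_run = 0
        elif start is not None:
            quiet_run += 1
            if quiet_run >= min_silence:
                # Close the segment just after its last loud sample.
                segments.append((start, i - quiet_run + 1))
                start, quiet_run = None, 0
    if start is not None:
        # The stream ended mid-segment; drop any trailing quiet samples.
        segments.append((start, len(samples) - quiet_run))
    return segments


audio = [0.0, 0.5, 0.6, 0.0, 0.4, 0.0, 0.0, 0.0, 0.7, 0.8, 0.0]
segments = split_on_silence(audio)
```

Short pauses, like the single quiet sample inside the first burst, do not split a segment; only a sufficiently long quiet run does.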

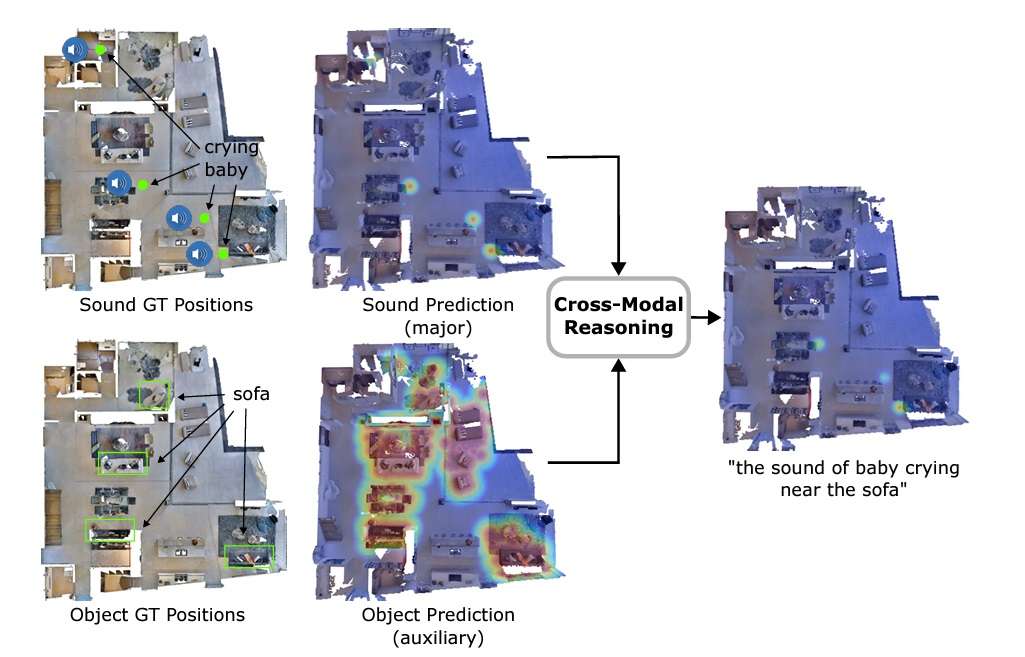

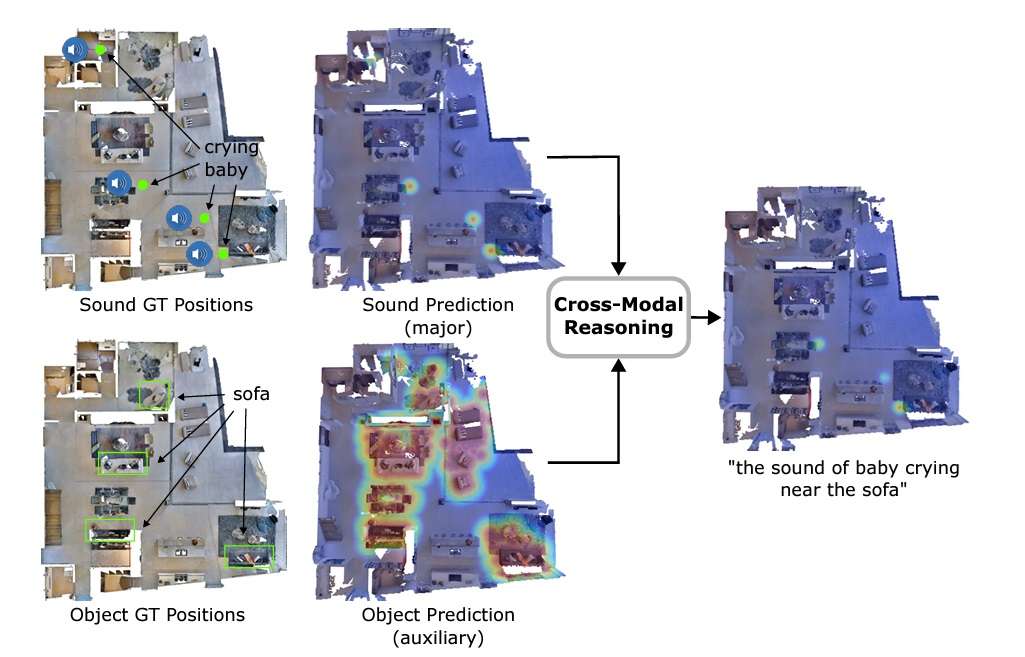

The key differentiator of AVLMaps is its ability to disambiguate goals by cross-referencing visual and audio features. In the paper, this is achieved by creating heatmaps with probabilities for each voxel position based on the distance to the target. The model multiplies the heatmaps from the different modalities and predicts the target at the position with the highest resulting probability.
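
A small 2D example shows how multiplying heatmaps resolves an ambiguous goal such as “the table where the beeping sound is coming from.” The sketch assumes an exponential fall-off with distance and a broader fall-off for audio than for objects; the functions `distance_heatmap` and `fuse`, the `decay` values, and the grid positions are illustrative assumptions rather than the paper's exact formulation.

```python
import math


def distance_heatmap(shape, targets, decay=1.0):
    """Score each cell by closeness to the nearest predicted target location."""
    rows, cols = shape
    heat = []
    for r in range(rows):
        row = []
        for c in range(cols):
            nearest = min(math.hypot(r - tr, c - tc) for tr, tc in targets)
            row.append(math.exp(-decay * nearest))
        heat.append(row)
    return heat


def fuse(heatmaps):
    """Multiply heatmaps cell by cell and return (fused_map, best_cell)."""
    rows, cols = len(heatmaps[0]), len(heatmaps[0][0])
    fused = [
        [math.prod(h[r][c] for h in heatmaps) for c in range(cols)]
        for r in range(rows)
    ]
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    best = max(cells, key=lambda rc: fused[rc[0]][rc[1]])
    return fused, best


shape = (5, 5)
# Two tables are detected, so the object heatmap alone is ambiguous.
object_heat = distance_heatmap(shape, targets=[(0, 0), (4, 4)], decay=1.0)
# The beeping is heard near one of them; audio is assumed to localize more coarsely.
sound_heat = distance_heatmap(shape, targets=[(4, 3)], decay=0.5)
fused, best = fuse([object_heat, sound_heat])
```

With these inputs the fused map peaks at the table in the corner next to the sound source, while the other table is suppressed because the audio heatmap is low there.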

VLMaps and AVLMaps vs. Other Methods for Robot Navigation

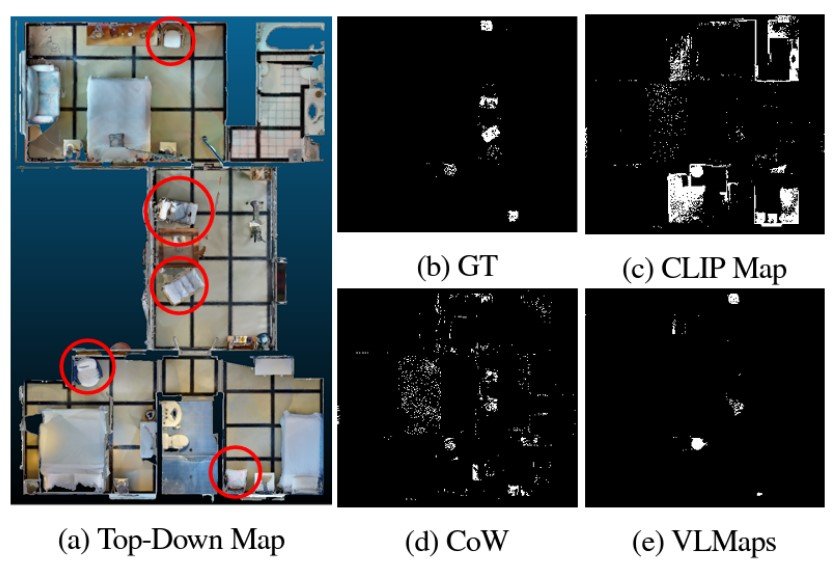

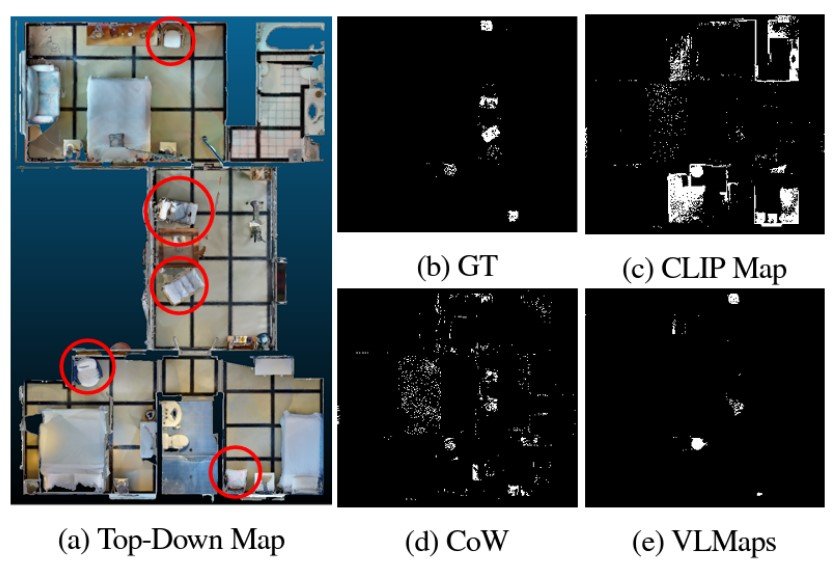

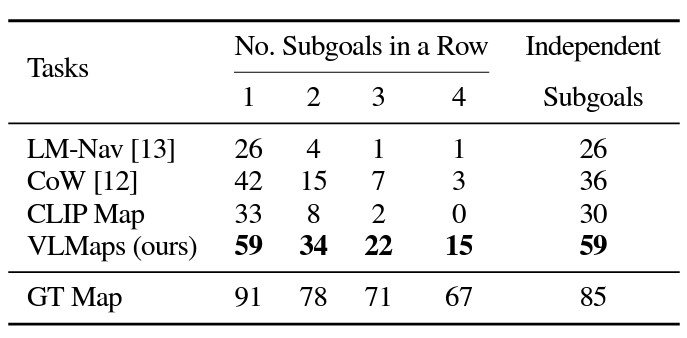

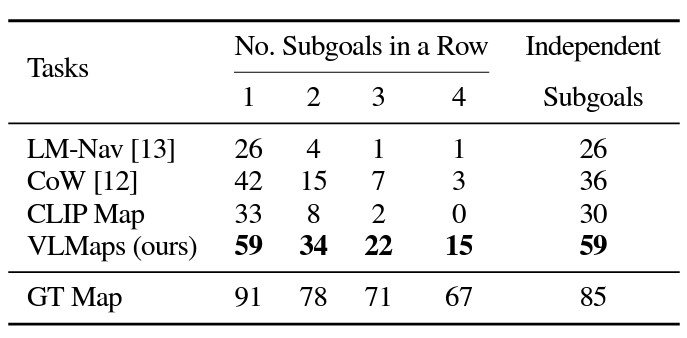

Experimental results show the promise of using techniques like VLMaps for robot navigation. Looking at the object maps that the various models generated for the object type “chair,” for example, it’s clear that VLMaps is more discerning in its predictions.

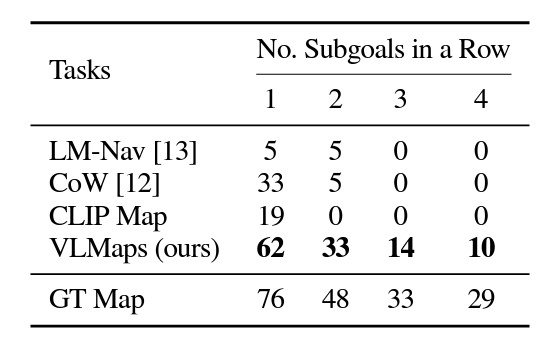

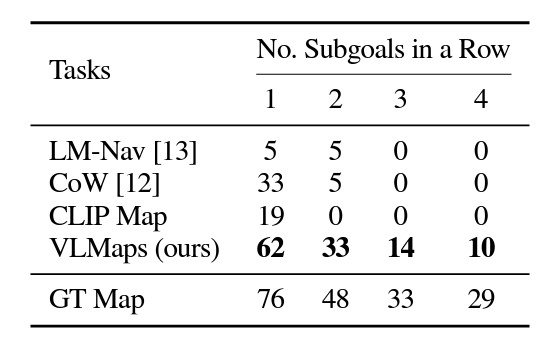

In multi-object navigation, VLMaps significantly outperformed conventional models. This is largely because VLMaps does not generate as many false positives as the other methods.

VLMaps also achieves much higher zero-shot spatial goal navigation success rates than the other open-vocabulary zero-shot navigation baselines.

Another area where VLMaps shows promising results is cross-embodiment navigation, where it is used to optimize route planning. In this case, VLMaps generated different obstacle maps for different robot embodiments: a ground-based LoCoBot and a flying drone. When provided with a drone-specific map, the drone significantly improved its performance by planning routes that fly over obstacles. This shows the effectiveness of VLMaps at both 2D and 3D spatial navigation.
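
The idea of embodiment-specific obstacle maps can be sketched by deriving a binary obstacle grid from a labeled map and a per-robot list of obstacle categories. The function `obstacle_map`, the label grid, and the two category lists are hypothetical; in particular, which categories count as obstacles for each robot is an assumption made for this example.

```python
def obstacle_map(label_grid, obstacle_categories):
    """Mark a cell as blocked (1) if its label is an obstacle for this embodiment."""
    blocked = set(obstacle_categories)
    return [[1 if label in blocked else 0 for label in row] for row in label_grid]


# Toy top-down map where each cell already carries its most likely category.
label_grid = [
    ["floor", "table", "wall"],
    ["floor", "chair", "wall"],
    ["floor", "floor", "wall"],
]

# Hypothetical per-embodiment obstacle lists.
ground_robot_obstacles = ["table", "chair", "wall"]
drone_obstacles = ["wall"]  # assumed to fly above furniture

ground_map = obstacle_map(label_grid, ground_robot_obstacles)
drone_map = obstacle_map(label_grid, drone_obstacles)
```

The same underlying map yields a more permissive grid for the drone, which is why a shared open-vocabulary map can serve robots with different movement constraints.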

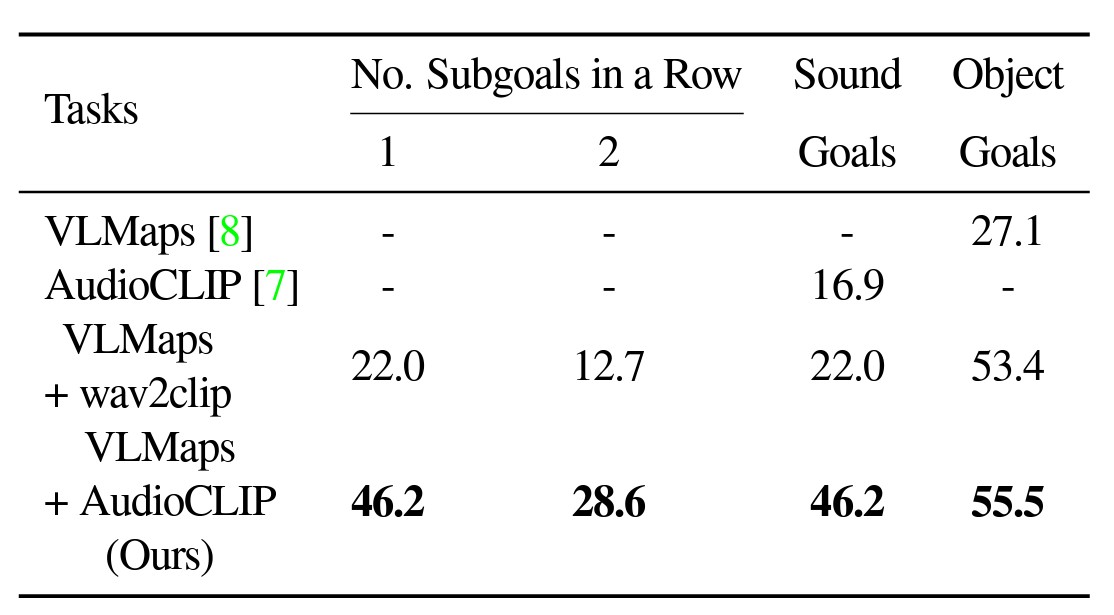

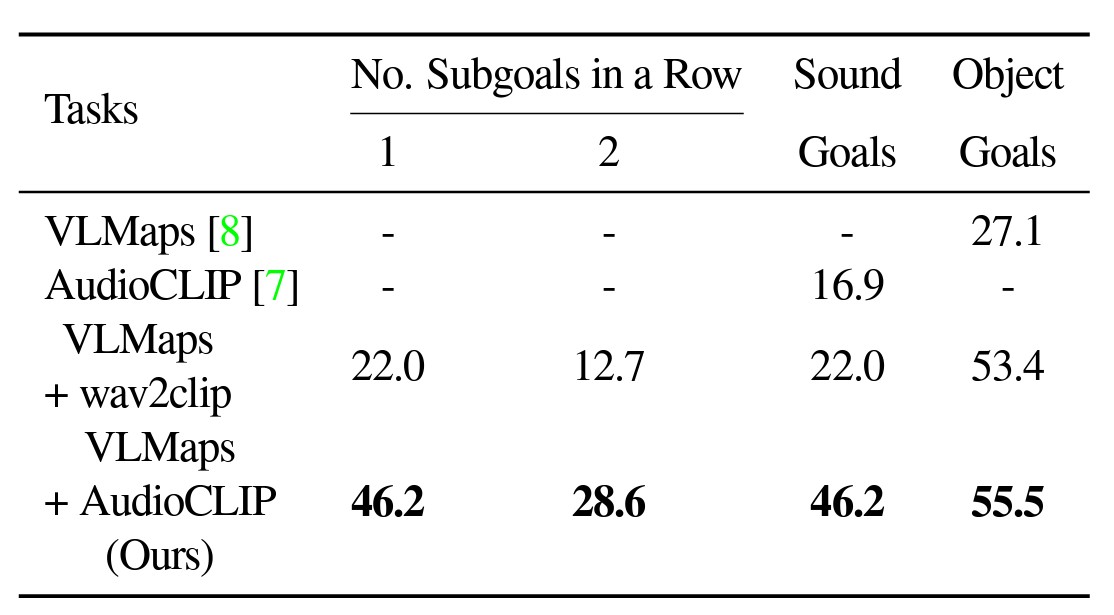

Similarly, during testing, AVLMaps outperformed VLMaps paired with both standard AudioCLIP and wav2clip in solving ambiguous goal navigation tasks. For the experiment, robots were made to navigate to one sound goal and one object goal in sequence.

What’s Next for Robot Navigation?

While models like VLMaps and AVLMaps show potential, there is still a long way to go. To mimic the navigational capabilities of humans and be useful in more real-life situations, we need systems with even higher success rates in carrying out complex, multi-goal navigational tasks.

Furthermore, these experiments used basic, drone-like robots. We have yet to see how these advanced navigational models can be combined with more human-like systems.

Another active area of research is event-based SLAM. Instead of relying purely on sensory information, these systems can use events to disambiguate goals or open up new navigational opportunities. Instead of using single frames, these systems capture changes in lighting and other characteristics to identify environmental events.

As these techniques evolve, we can expect increased adoption in fields like autonomous vehicles, nanorobotics, agriculture, and even robotic surgery.
