Did you know that properly preparing your data can improve your model’s performance?

Techniques like normalization and standardization help scale data appropriately, leading to better results and easier interpretation.

Want to know the difference between these two techniques? Keep reading and we’ll explain it in a simple way! But first, let’s quickly understand why data preprocessing is important in machine learning.

Data Preprocessing in Machine Learning

In machine learning, data preprocessing is the process of preparing raw data for ML algorithms. It involves steps such as data cleaning (fixing incorrect or incomplete data), data reduction (removing redundant or irrelevant data), and data transformation (converting data to a preferred format).

This process is a crucial part of ML because it directly influences the performance and accuracy of the models. One of the common data preprocessing steps in machine learning is data scaling, which is the process of changing the range of data values without changing the relative differences between them.

Scaling data is important before using it with ML algorithms because it ensures features have a comparable range, preventing those with larger values from dominating the learning process.

By using this technique, you can improve model performance and get faster convergence and better interpretability. ML can also detect vulnerabilities or weaknesses in encryption methods, helping ensure they keep data secure.

Definitions and Concepts

Data Normalization

In machine learning, data normalization transforms data features to a consistent range (typically 0 to 1) or to a standard scale with zero mean and unit variance, so that features with larger scales do not dominate the learning process.

It is also known as feature scaling, and its primary goal is to make the features comparable. It also improves the performance of ML models, especially those sensitive to feature scaling.

Normalization techniques are used to rescale data values into a similar range, which you can achieve using methods like min-max scaling (rescaling to a 0-1 range) or standardization (transforming to zero mean and unit variance). In ML data normalization, min-max scaling transforms features to a specified range using the formula given below:

Formula: X_normalized = (X - X_min) / (X_max - X_min)

Where:

X is the original feature value.

X_min is the minimum value of the feature in the dataset.

X_max is the maximum value of the feature in the dataset.

X_normalized is the normalized or scaled value.
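The min-max formula above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `min_max_normalize` and the sample values are hypothetical, and returning zeros for a constant feature is just one possible convention.

```python
def min_max_normalize(values):
    """Rescale a list of numbers to the 0-1 range using min-max scaling."""
    x_min, x_max = min(values), max(values)
    if x_max == x_min:
        # All values are identical, so the formula would divide by zero.
        # Returning zeros is one possible convention (an assumption here).
        return [0.0 for _ in values]
    return [(x - x_min) / (x_max - x_min) for x in values]


# Hypothetical feature values, used only for illustration.
feature = [10, 20, 25, 50]
print(min_max_normalize(feature))  # [0.0, 0.25, 0.375, 1.0]
```

The smallest value always maps to 0 and the largest to 1, and every other value lands proportionally in between.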

For example, imagine you have a dataset with two features: “Room” (ranging from 1 to 6) and “Age” (ranging from 1 to 40). Without normalization, the “Age” feature would likely dominate the “Room” feature in calculations, as its values are larger. Let’s take one sample from this dataset to see how normalization works: Room = 2, Age = 30.

Before Normalization:

As you can see, the scatter plot shows “Age” values spread much more widely than “Room” values before normalization, making it difficult to find any patterns between them.

After Normalization:

Using the normalization formula X_normalized = (X - X_min) / (X_max - X_min), we get:

Room2_normalized = (2 - 1) / (6 - 1) = 1/5 = 0.2

Age30_normalized = (30 - 1) / (40 - 1) = 29/39 ≈ 0.74

0.2 and 0.74 are the new normalized values, and both fall within the 0-1 range. If we normalize all the feature values and plot them, we get a distribution like the one below:

Now, this scatter plot shows “Room” and “Age” values scaled to the 0 to 1 range. This gives you a much clearer comparison of their relationship.

Data Standardization

Data standardization is also known as Z-score normalization. It is another data preprocessing technique in ML that scales features to have a mean of 0 and a standard deviation of 1. This technique ensures all features are on a comparable scale.

This technique helps ML algorithms, especially those sensitive to feature scaling like k-NN, SVM, and linear regression, to perform better. Additionally, it prevents features with larger scales from dominating the model’s output and centers each feature around zero, which is helpful for some algorithms. Note that it rescales the data but does not change the shape of its distribution.

Standardization transforms the data by subtracting the mean of each feature and dividing by its standard deviation. Its formula is given below:

Formula: X’ = (X - Mean(X)) / Std(X)

Where:

X is the original feature value.

X’ is the standardized feature value.

Mean(X) is the mean of the feature.

Std(X) is the standard deviation of X.

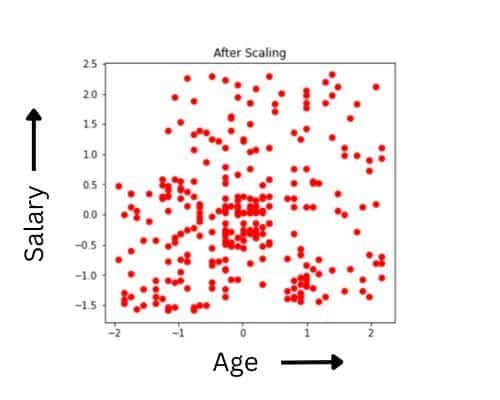

For instance, here’s a dataset with massive function values: “Wage” (starting from 0 to 140000) and “Age” (starting from 0 to 60). Right here, you possibly can see that with out standardization, the “Wage” function would seemingly dominate the “Age” function in calculations because of bigger function values.

To grasp it clearly, let’s assume a random worth from the above knowledge set to see how standardization works-

Earlier than standardization

Let’s say a function has values:

Wage= 100000, 115000, 123000, 133000, 140000

Age= 20, 23, 30, 38, 55

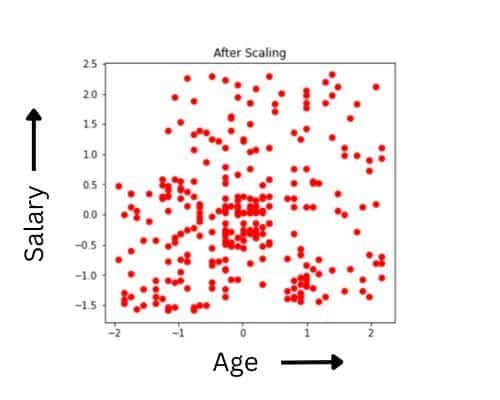

After standardization

Using the formula X' = (X - Mean(X)) / Std(X)

Wage = 100000, 115000, 123000, 133000, 140000

Mean(X) = 122200

Sample standard deviation Std(X) = 15642.89 (approximately)

Standardized values:

Wage X' = (100000 - 122200) / 15642.89 = -1.42

(115000 - 122200) / 15642.89 = -0.46

(123000 - 122200) / 15642.89 = 0.05

(133000 - 122200) / 15642.89 = 0.69

(140000 - 122200) / 15642.89 = 1.14

Similarly,

Age X' = -0.94, -0.73, -0.23, 0.34, 1.55
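The worked example above can be reproduced with Python’s built-in `statistics` module. This is a small sketch, and the helper name `standardize` is hypothetical. It assumes the sample standard deviation (dividing by n - 1), because that is the version that yields 15642.89 for the Wage values; the population version (dividing by n) gives a smaller number.

```python
import statistics

wage = [100000, 115000, 123000, 133000, 140000]
age = [20, 23, 30, 38, 55]


def standardize(values):
    """Return z-scores using the sample standard deviation (n - 1)."""
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample standard deviation
    return [(x - mean) / std for x in values]


print(statistics.mean(wage))              # 122200
print(round(statistics.stdev(wage), 2))   # 15642.89
print(round(statistics.pstdev(wage), 2))  # population version, 13991.43

# Z-scores rounded to two decimals, for comparison with the values above.
print([round(z, 2) for z in standardize(wage)])
print([round(z, 2) for z in standardize(age)])
```

Which divisor to use is a design choice. Some libraries default to the population version (scikit-learn’s `StandardScaler` does, for instance), so standardized values can differ slightly between tools.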

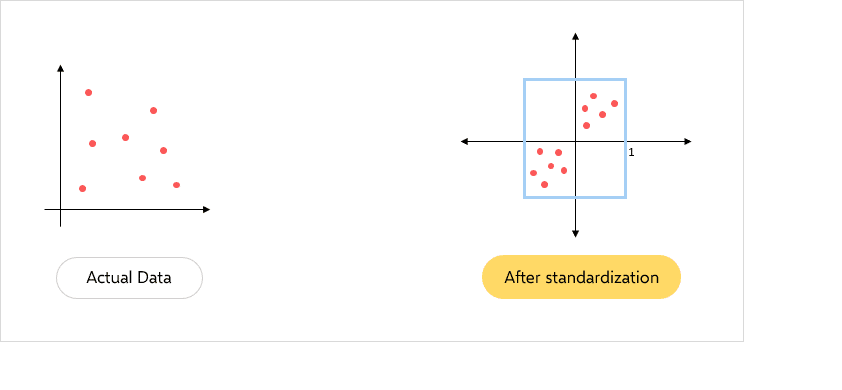

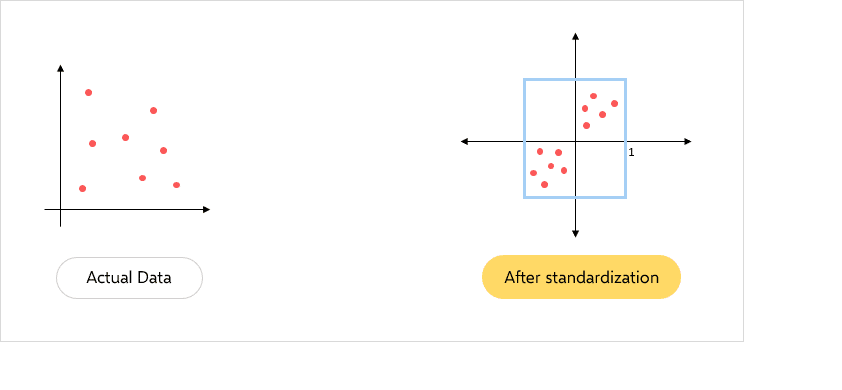

Before and after standardization, both plots have the same shape. The only difference is in the X and Y scales. After standardization, the mean has shifted to the origin.

Key Differences

| Parameter | Normalization | Standardization |
| --- | --- | --- |
| Definition | Transforms data features to a consistent range, typically 0 to 1. | Scales features to have a mean of 0 and a standard deviation of 1. |
| Purpose | To change the scale of the features so that they fit within a specific range for easy feature comparison. | To center and rescale the features to zero mean and unit variance so that features with larger scales do not dominate the model’s output. |
| Formula | X_normalized = (X - X_min) / (X_max - X_min) | X' = (X - Mean(X)) / Std(X) |
| Dependency on Distribution | Does not depend on the distribution of the data. | Works best when the data is approximately normally distributed. |
| Sensitivity to Outliers | Highly sensitive to outliers, as min and max are determined by extreme values. | Less sensitive to outliers, since the mean and standard deviation are affected by extreme values less than min and max are. |
| Impact on the Shape of Plot | If there are significant outliers, the remaining values are compressed into a narrow part of the range. | Maintains the original shape of the plot but aligns it to a standard scale. |
| Use Cases | Useful for ML algorithms, particularly those sensitive to feature scales, e.g., neural networks, SVM, and k-NN. | Useful for ML algorithms that assume data is normally distributed or where features have vastly different scales, e.g., clustering models, linear regression, and logistic regression. |

Data Normalization vs. Standardization: Scale and Distribution

1. Effect on Data Range:

- Normalization: As we saw earlier, normalization directly modifies the range of the data to ensure all values fall within defined boundaries. It is preferable when you are unsure about the exact feature distribution or the data distribution does not match the Gaussian distribution. Thus, this technique offers a reliable way to help the model perform better and more accurately.

- Standardization: On the other hand, standardization does not have a predefined range, and the transformed data can have values outside the original range. This method is very effective if the feature distribution is known or the data distribution matches the Gaussian distribution.

2. Effect on Distribution:

- Normalization: Normalization does not inherently change the shape of the distribution; it primarily focuses on scaling the data within a specific range.

- Standardization: In contrast, standardization focuses on the location and spread of the distribution, centering the data around a mean of 0 and scaling it to a standard deviation of 1.
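The two effects described above can be checked on a small example. The following sketch uses hypothetical values with one deliberate outlier and the population standard deviation (an assumption made here for simplicity). It shows that normalized values stay inside the 0-1 range, while standardized values have a mean near 0 and a standard deviation near 1 but no fixed bounds.

```python
import statistics

# Hypothetical feature with one large outlier (1000), for illustration only.
data = [10, 12, 14, 16, 1000]

x_min, x_max = min(data), max(data)
normalized = [(x - x_min) / (x_max - x_min) for x in data]

mean = statistics.mean(data)
std = statistics.pstdev(data)  # population standard deviation
standardized = [(x - mean) / std for x in data]

# Normalized values always land inside [0, 1].
print(min(normalized), max(normalized))  # 0.0 1.0

# Standardized values have mean ~0 and standard deviation ~1,
# but no fixed minimum or maximum.
print(statistics.mean(standardized))
print(statistics.pstdev(standardized))
print(min(standardized), max(standardized))
```

Printing `normalized` also shows how the outlier squeezes the four ordinary values into a tiny slice near 0, which is why extreme values deserve attention before min-max scaling.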

Use cases: Data Normalization vs. Standardization

Scenarios and models that benefit from normalization:

Normalization benefits several ML models, particularly those sensitive to feature scales. For example:

- Models such as PCA, neural networks, linear models like linear/logistic regression, SVM, and k-NN benefit greatly from normalization.

- In neural networks, normalization is a standard practice, as it can lead to faster convergence and better generalization performance.

- Normalization also reduces the effects of scale differences between input features, as it makes the inputs more consistent. This way, normalizing the input for an ML model improves convergence and training stability.

Scenarios and models that benefit from standardization:

Various ML models, as well as those dealing with data where features have vastly different scales, benefit significantly from data standardization. Here are some examples:

- Support Vector Machine (SVM) often requires standardization because it maximizes the margin between the support vectors and the separating plane. Thus, standardization is needed when computing the margin distance to ensure one feature won’t dominate another just because it takes large values.

- Clustering models include algorithms that work based on distance metrics. That means features with larger values will have a more significant effect on the clustering result. Thus, it is important to standardize the data before building a clustering model.

- If you have a Gaussian data distribution, standardization is more effective than other techniques. It works best with a normal distribution and benefits ML algorithms that assume a Gaussian distribution.
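To illustrate why distance-based models such as clustering and k-NN benefit from scaling, the sketch below compares Euclidean distances before and after standardization. The three (wage, age) records and the helper names `euclidean` and `standardize_columns` are hypothetical, and the population standard deviation is assumed.

```python
import math
import statistics

# Hypothetical people described by (wage, age); values are illustrative.
people = [(100000, 20), (101000, 55), (140000, 21)]


def euclidean(p, q):
    """Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def standardize_columns(rows):
    """Standardize each column to zero mean and unit (population) std."""
    columns = list(zip(*rows))
    scaled = []
    for col in columns:
        mean, std = statistics.mean(col), statistics.pstdev(col)
        scaled.append([(x - mean) / std for x in col])
    return list(zip(*scaled))


a, b, c = people
# Raw distances: the wage gap swamps the 35-year age gap between a and b.
print(euclidean(a, b), euclidean(a, c))

sa, sb, sc = standardize_columns(people)
# After standardization both features contribute on a comparable scale.
print(euclidean(sa, sb), euclidean(sa, sc))
```

On the raw data, the first person looks far closer to the second than to the third, purely because wage is measured in much larger numbers. After standardization, the large age difference counts about as much as the large wage difference.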

Advantages and Limitations

Advantages of Normalization

Normalization has various advantages that make this technique widely popular. Some of them are listed below:

- High Model Accuracy: Normalization helps algorithms make more accurate predictions by preventing features with larger scales from dominating the learning process.

- Faster Training: It can speed up the training process of models and help them converge more quickly.

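- Better Handling of Outliers: Because every value is bounded to a fixed range, it can limit how much an extreme value influences the model. Keep in mind, though, that min-max scaling itself is sensitive to outliers, so extreme values are best dealt with before scaling.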

Limitations of Normalization

Normalization certainly has its advantages, but it also carries some drawbacks that can affect your model’s performance.

- Loss of Information: Normalization can sometimes lead to a loss of information, mostly in cases where the original range or distribution of the data is meaningful or important for the analysis. For example, if you normalize a feature with a wide range, the original scale is lost, potentially making it harder to interpret the feature’s contribution.

- Increased Computational Complexity: It adds an extra step to the data preprocessing pipeline, which can increase computational time, especially for large datasets or real-time applications.

Advantages of Standardization

Standardization also has its edge over other techniques in various scenarios.

- Improved Model Performance: Standardization ensures features are on the same scale, allowing algorithms to learn more effectively and ultimately improving the performance of ML models.

- Easier Comparison of Coefficients: It allows a direct comparison of model coefficients, as they are no longer influenced by different scales.

- Outlier Handling: It can also help mitigate the impact of outliers, as they are less likely to dominate the model’s output.

Limitations of Standardization

Standardization, while useful for many machine learning tasks, also has drawbacks. Some of its major drawbacks are listed below:

- Loss of Original Unit Interpretation: Standardizing data transforms values onto a standardized scale (mean of 0, standard deviation of 1), which can make it difficult to interpret the data in its original context.

- Dependency on Normality Assumption: It works best when the data roughly follows a normal distribution. If the data is far from normally distributed, standardization might not be the most appropriate choice for your model and could lead to misleading results.

Conclusion

Feature scaling is a crucial part of data preprocessing in ML. A thorough understanding of the right technique for each dataset can significantly improve the performance and accuracy of models.

For example, normalization proves to be particularly effective for distance-based and gradient-based algorithms. On the other hand, you should use standardization for algorithms that involve weights and those that assume a normal distribution. In short, you should select the most suitable technique for the specific scenario at hand, as both approaches can yield significant benefits when applied appropriately.