Large Action Models (LAMs) are deep learning models that aim to understand instructions and execute complex tasks and actions accordingly. LAMs also combine language understanding with reasoning and software agents.

Although still under research and development, these models could be a transformative force in the Artificial Intelligence (AI) world. LAMs represent a significant leap beyond text generation and understanding. They have the potential to revolutionize how we work and automate tasks across many industries.

We will explore how Large Action Models work, their diverse capabilities in real-world applications, and look at some open-source models. Get ready for a journey into Large Action Models, where AI is not just talking, but taking action.

What Are Large Action Models and How Do They Work?

Large Action Models (LAMs) are AI software designed to take action in a hierarchical manner, where tasks are broken down into smaller subtasks. Actions are carried out from user-given instructions using agents.
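To make the hierarchical idea concrete, here is a minimal sketch of a task tree that is flattened into an ordered list of leaf subtasks. The `Task` class, the `flatten` helper, and the trip-booking example are all hypothetical illustrations, not part of any particular LAM.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    """A node in a hypothetical task hierarchy."""
    name: str
    subtasks: list[Task] = field(default_factory=list)


def flatten(task: Task) -> list[str]:
    """Return the leaf subtasks in depth-first (execution) order."""
    if not task.subtasks:
        return [task.name]
    steps: list[str] = []
    for sub in task.subtasks:
        steps.extend(flatten(sub))
    return steps


# Illustrative request broken down into smaller subtasks.
book_trip = Task("book a trip", [
    Task("book flight", [Task("search flights"), Task("pay for flight")]),
    Task("book hotel", [Task("search hotels"), Task("reserve room")]),
])

print(flatten(book_trip))
```

In a real system the tree would be produced by the model's planner rather than written by hand; the sketch only shows the shape of the data.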

Unlike large language models, a Large Action Model combines language understanding with logic and reasoning to execute various tasks. This approach can often learn from feedback and interactions, although it should not be confused with reinforcement learning.

Neuro-symbolic programming has been an important technique in developing more capable Large Action Models. This technique combines the learning capabilities of neural networks with the logical reasoning of symbolic AI. By combining the best of both worlds, LAMs can understand language, reason about potential actions, and execute based on instruction.

The architecture of a Large Action Model can vary depending on the wide range of tasks it can perform. However, understanding the differences between LAMs and LLMs is essential before diving into their components.

LLMs vs. LAMs

| Feature | Large Language Models (LLMs) | Large Action Models (LAMs) |
|---|---|---|
| What can it do | Language generation | Task execution and completion |
| Input | Textual data | Text, images, instructions, etc. |
| Output | Textual data | Actions, text |
| Training data | Large text corpus | Text, code, images, actions |
| Application areas | Content creation, translation, chatbots | Automation, decision-making, complex interactions |
| Strengths | Language understanding, text generation | Reasoning, planning, decision-making, real-time interaction |
| Weaknesses | Limited reasoning, lack of action capabilities | Still under development, ethical concerns |

Now we can delve deeper into the specific components of a large action model. These components usually are:

- Pattern Recognition: Neural networks

- Symbolic AI: Logical reasoning

- Action Model: Executes tasks (agents)

Neuro-Symbolic Programming

Neuro-symbolic AI combines neural networks’ ability to learn patterns with symbolic AI reasoning techniques, creating a powerful synergy that addresses the limitations of each approach.

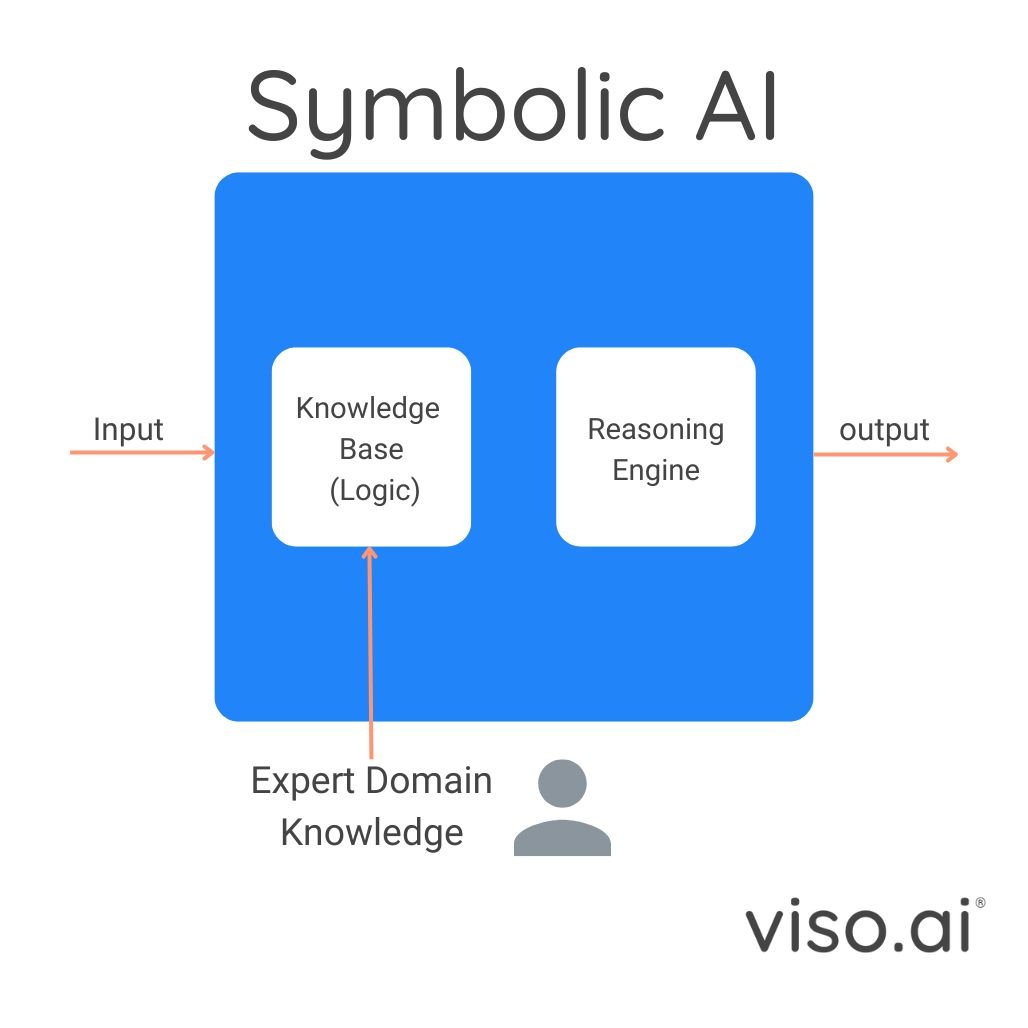

Symbolic AI, often based on logic programming (basically a set of if-then statements), excels at reasoning and providing explanations for its decisions. It uses formal languages, like first-order logic, to represent knowledge and an inference engine to draw logical conclusions based on user queries.

This ability to trace outputs to the rules and knowledge within the program makes the symbolic AI model highly interpretable and explainable. Additionally, it allows us to expand the system’s knowledge as new information becomes available. But this approach alone has its limitations:

- New rules don’t undo old knowledge.

- Symbols are not linked to representations or raw data.
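As a rough illustration of the if-then style of symbolic reasoning described above, the following sketch implements a tiny forward-chaining inference loop and records which premises produced each conclusion. The rule set, the fact names, and the `forward_chain` function are made up for this example.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new fact can be derived.

    rules is a list of (premises, conclusion) pairs, where premises is a set.
    Returns the final set of facts and a trace mapping each derived fact
    to the premises that produced it.
    """
    known = set(facts)
    trace = {}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only if all premises hold and it adds something new.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                trace[conclusion] = sorted(premises)
                changed = True
    return known, trace


# Hypothetical knowledge base.
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]

facts, trace = forward_chain(
    {"has_feathers", "lays_eggs", "cannot_fly", "swims"}, RULES
)
print(trace)
```

The `trace` dictionary is what makes the result explainable: every conclusion points back to the facts that justified it. The loop only ever adds facts and never retracts them, which mirrors the first limitation in the list above.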

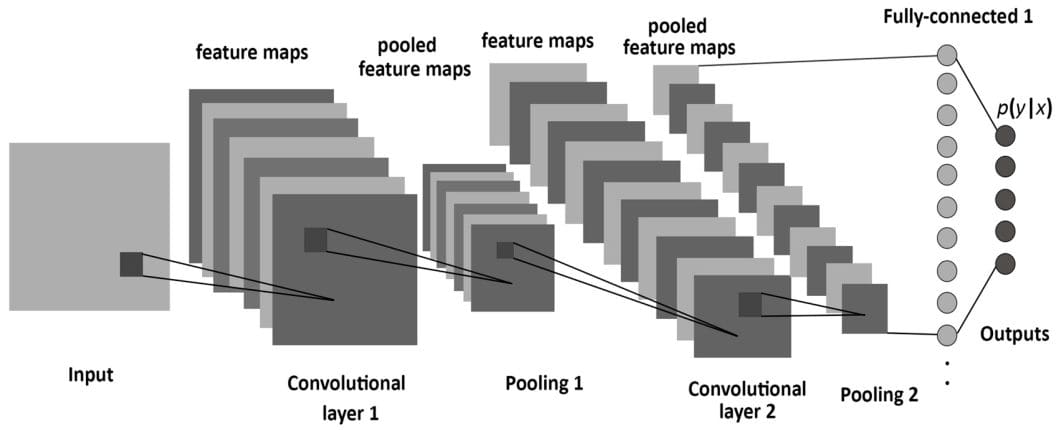

In contrast, the neural aspect of neuro-symbolic programming involves deep neural networks like LLMs and vision models, which thrive on learning from massive datasets and excel at recognizing patterns within them.

This pattern recognition capability allows neural networks to perform tasks like image classification, object detection, and predicting the next word in NLP. However, they lack the explicit reasoning, logic, and explainability that symbolic AI offers.

Neuro-symbolic AI aims to merge these two methods, giving us technologies like Large Action Models (LAMs). These systems can combine the powerful pattern-recognition abilities of neural networks with the reasoning capabilities of symbolic AI, enabling them to reason about abstract concepts and generate explainable results.

Neuro-symbolic AI approaches can be broadly categorized into two main types:

- Compressing structured symbolic knowledge into a format that can be integrated with neural network patterns. This allows the model to reason using the combined knowledge.

- Extracting information from the patterns learned by neural networks. This extracted information is then mapped to structured symbolic knowledge (a process called lifting) and used for symbolic reasoning.
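The second approach, lifting, can be sketched in a few lines. In the toy example below, soft scores standing in for a neural network's output are thresholded into discrete symbolic facts, which a hand-written rule then reasons over. The scores, labels, threshold, and the `can_submit` rule are all assumptions made for illustration.

```python
def lift(scores: dict[str, float], threshold: float = 0.5) -> set[str]:
    """Map soft neural scores to discrete symbolic facts."""
    return {label for label, p in scores.items() if p >= threshold}


def can_submit(facts: set[str]) -> bool:
    """Symbolic rule: submitting is allowed if a button is visible
    and no dialog is blocking it."""
    return "button_visible" in facts and "dialog_open" not in facts


# Stand-in for a vision model's output on a screenshot (made-up numbers).
detector_scores = {
    "button_visible": 0.93,
    "dialog_open": 0.12,
    "text_field_focused": 0.71,
}

facts = lift(detector_scores)
print(sorted(facts), can_submit(facts))
```

Real systems use far richer mappings than a fixed threshold, but the division of labour is the same: the network supplies the observations and the symbolic layer supplies the reasoning.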

Action Engine

In Large Action Models (LAMs), neuro-symbolic programming empowers neural models like LLMs with reasoning and planning abilities from symbolic AI methods.

The core concept of AI agents is used to execute the generated plans and possibly adapt to new challenges. Open-source LAMs often integrate logic programming with vision and language models, connecting the software to tools and APIs of useful apps and services to perform tasks.

Let’s see how these AI agents work.

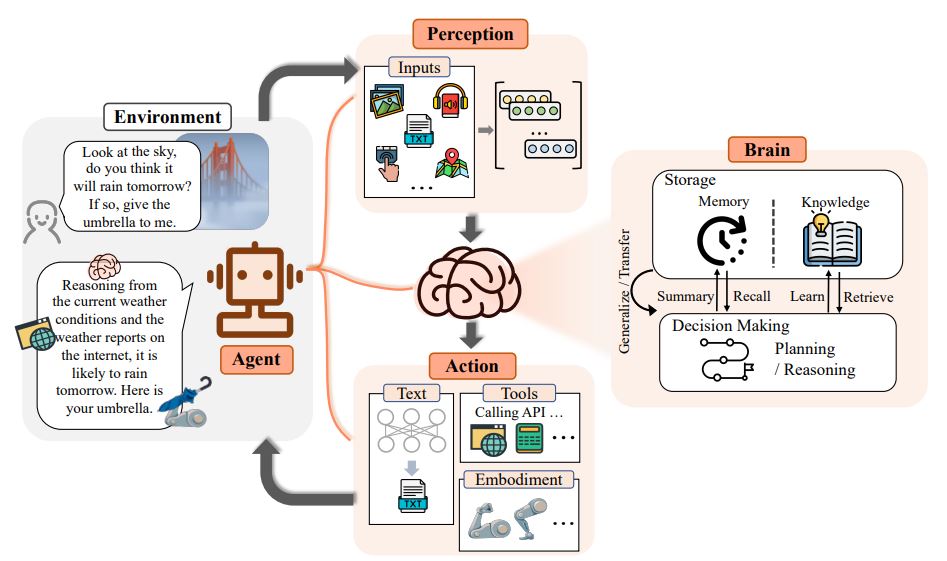

An AI agent is software that can understand its environment and take action. Actions depend on the current state of the environment and the given conditions or knowledge. Additionally, some AI agents can adapt to changes and learn based on interactions.

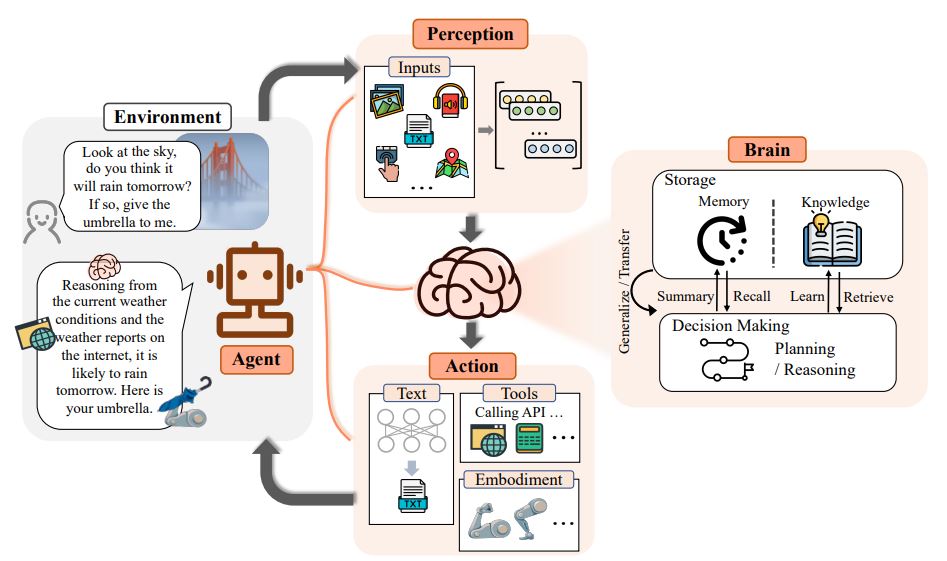

Using the visualization above, let’s walk through how a Large Action Model turns our requests into action:

- Perception: A LAM receives input as voice, text, or visuals, accompanied by a task request.

- Brain: This is the neuro-symbolic AI of the Large Action Model, which includes capabilities to plan and reason as well as memorize and learn or retrieve knowledge.

- Agent: This is how the large action model takes action, as a user interface or a device. It analyzes the given input task using the brain and then takes action based on that.

- Action: This is where the doing starts. The model outputs a combination of text, visuals, and actions. For example, the model could respond to a query using an LLM capability to generate text and take action based on the reasoning capabilities of symbolic AI. The action involves breaking down the task into subtasks and performing each subtask using features like calling APIs or leveraging apps, tools, and services through the agent software.
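The four stages above can be sketched as a small pipeline. This is only a toy under stated assumptions: the tool functions, the `TOOLS` registry, and the keyword-based `plan` function are hypothetical stand-ins for real APIs and for the neuro-symbolic "brain".

```python
from typing import Callable


# Hypothetical tools the agent can call; real systems would wrap APIs or apps.
def search_flights(city: str) -> str:
    return f"found flights to {city}"


def book_hotel(city: str) -> str:
    return f"reserved hotel in {city}"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "book_hotel": book_hotel,
}


def perceive(raw_request: str) -> dict:
    """Perception: normalise the input into a task request (text only here)."""
    return {"text": raw_request.strip().lower()}


def plan(observation: dict) -> list[tuple[str, str]]:
    """Brain: rule-based stand-in that splits the task into (tool, argument) subtasks."""
    text = observation["text"]
    city = text.rsplit(" to ", 1)[-1] if " to " in text else "unknown"
    steps = []
    if "trip" in text or "flight" in text:
        steps.append(("search_flights", city))
    if "trip" in text or "hotel" in text:
        steps.append(("book_hotel", city))
    return steps


def act(steps: list[tuple[str, str]]) -> list[str]:
    """Agent and action: run each subtask through the matching tool."""
    return [TOOLS[tool](arg) for tool, arg in steps]


results = act(plan(perceive("Plan a trip to Lisbon")))
print(results)
```

Keeping the tools in a registry keyed by name means the planner only has to emit tool names and arguments, which is roughly how an agent layer can stay separate from the reasoning layer.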

What Can Large Action Models Do?

Large Action Models (LAMs) can do almost any task they are trained to do. By understanding human intention and responding to complex instructions, LAMs can automate simple or complex tasks and make decisions based on text and visual input. Crucially, LAMs can often incorporate explainability, allowing us to trace their reasoning process.

Rabbit R1 is one of the most popular large action models and a great example to showcase the power of these models. Rabbit R1 combines:

- Vision tasks

- Web portal for connecting services and applications and adding new tasks with teach mode.

- Teach mode allows users to instruct and guide the model by doing the task themselves.

While the term large action models already existed and was an ongoing area of research and development, Rabbit R1 and its OS popularized it. Open-source alternatives existed, often incorporating similar principles of logic programming and vision/language models to interact with APIs and perform actions based on user requests.

Open-Source Models

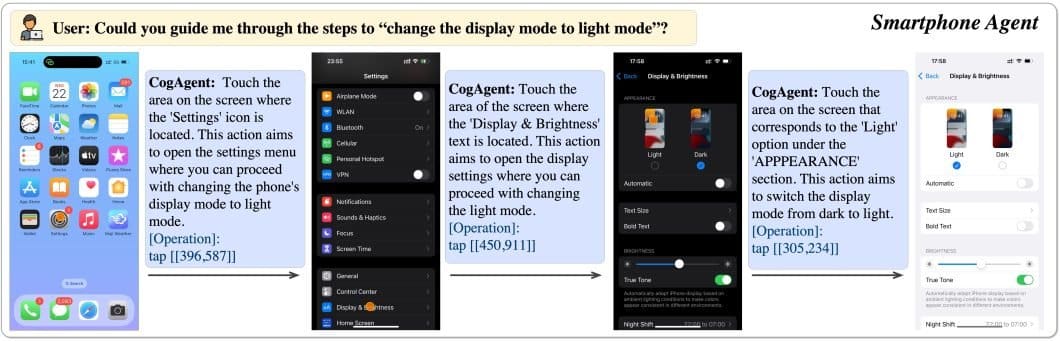

1. CogAgent

CogAgent is an open-source action model based on CogVLM, an open-source vision language model. It functions as a visual agent capable of generating plans, determining the next action, and providing precise coordinates for specific operations within any given GUI screenshot.

This model can also do visual question answering (VQA) on any GUI screenshot, as well as OCR-related tasks.

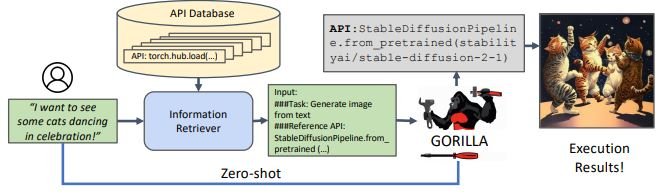

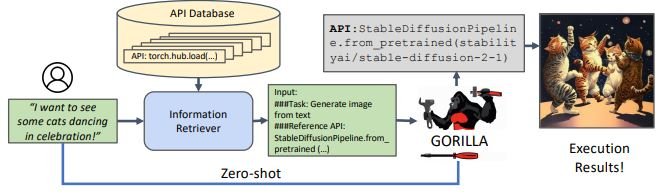

2. Gorilla

Gorilla is an impressive open-source large action model that empowers LLMs to utilize thousands of tools through precise API calls. It accurately identifies and executes the appropriate API call by understanding the needed action from natural language queries. This approach has successfully invoked over 1,600 (and growing) APIs with exceptional accuracy while minimizing hallucination.

Gorilla uses its own execution engine, GoEx, as a runtime environment for executing LLM-generated plans, including code execution and API calls.

The visualization above shows a clear example of large action models at work. Here, the user wants to see a specific image, and the model retrieves the needed action from the knowledge database and executes the needed code through an API call, all in a zero-shot manner.
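The retrieve-then-call pattern described here can be illustrated with a deliberately simple sketch. It is not Gorilla's actual method: the API catalogue is invented, and word overlap stands in for the learned retrieval a real system would use. The call is only built as a string, never executed.

```python
import re

# Hypothetical API catalogue: name -> short description.
API_DOCS = {
    "image_search": "search for an image or photo matching a text query",
    "translate_text": "translate text from one language to another",
    "send_email": "send an email message to a recipient",
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve_api(query: str) -> str:
    """Pick the API whose description shares the most words with the query."""
    q = tokens(query)
    return max(API_DOCS, key=lambda name: len(q & tokens(API_DOCS[name])))


def handle(query: str) -> str:
    """Build (but do not execute) a call string for the retrieved API."""
    return f"{retrieve_api(query)}(query={query!r})"


print(handle("show me an image of a red panda"))
```

A production system would validate the generated call and run it inside a controlled runtime, which is the role the text attributes to GoEx.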

Real-World Applications of Large Action Models

The power of Large Action Models (LAMs) is reaching into many industries, transforming how we interact with technology and automate complex tasks. LAMs are proving their worth as a comprehensive tool.

Let’s delve into some examples where large action models can be applied.

- Robotics: Large Action Models can be useful for creating more intelligent and autonomous robots capable of understanding and responding. This enhances human-robot interaction and opens new avenues for automation in manufacturing, healthcare, and even space exploration.

- Customer Service and Support: Imagine a customer service AI agent that understands a customer’s problem and can take immediate action to resolve it. LAMs can make this a reality by streamlining processes like ticket resolution, refunds, and account updates.

- Finance: In the financial sector, LAMs can analyze complex data based on knowledgeable input and provide personalized recommendations and automation for investments and financial planning.

- Education: Large Action Models could transform the educational sector by offering personalized learning experiences depending on each student’s needs. They can provide instant feedback, assess assignments, and generate adaptive educational content.

These examples highlight just a few ways LAMs can revolutionize industries and enhance our interaction with technology. Research and development in Large Action Models are still in the early stages, and we can expect them to unlock further possibilities.

What’s Next For Large Action Models?

Large Action Models (LAMs) could potentially redefine how we interact with technology and automate tasks across various domains. Their unique ability to understand instructions, reason with logic, make decisions, and execute actions has immense potential. From enhancing customer service to revolutionizing robotics and education, LAMs offer a glimpse into a future where AI-powered agents seamlessly integrate into our lives.

As research progresses, we can anticipate LAMs becoming more sophisticated, capable of handling even high-level complex tasks and understanding domain-specific instructions. However, with great power comes responsibility. Ensuring the safety, fairness, and ethical use of LAMs is crucial.

Addressing challenges like bias in training data and potential misuse will be essential as we continue to develop and deploy these powerful models. The future of LAMs is bright. As they evolve, these models will have a role in shaping a more efficient, productive, and human-centered technological landscape.